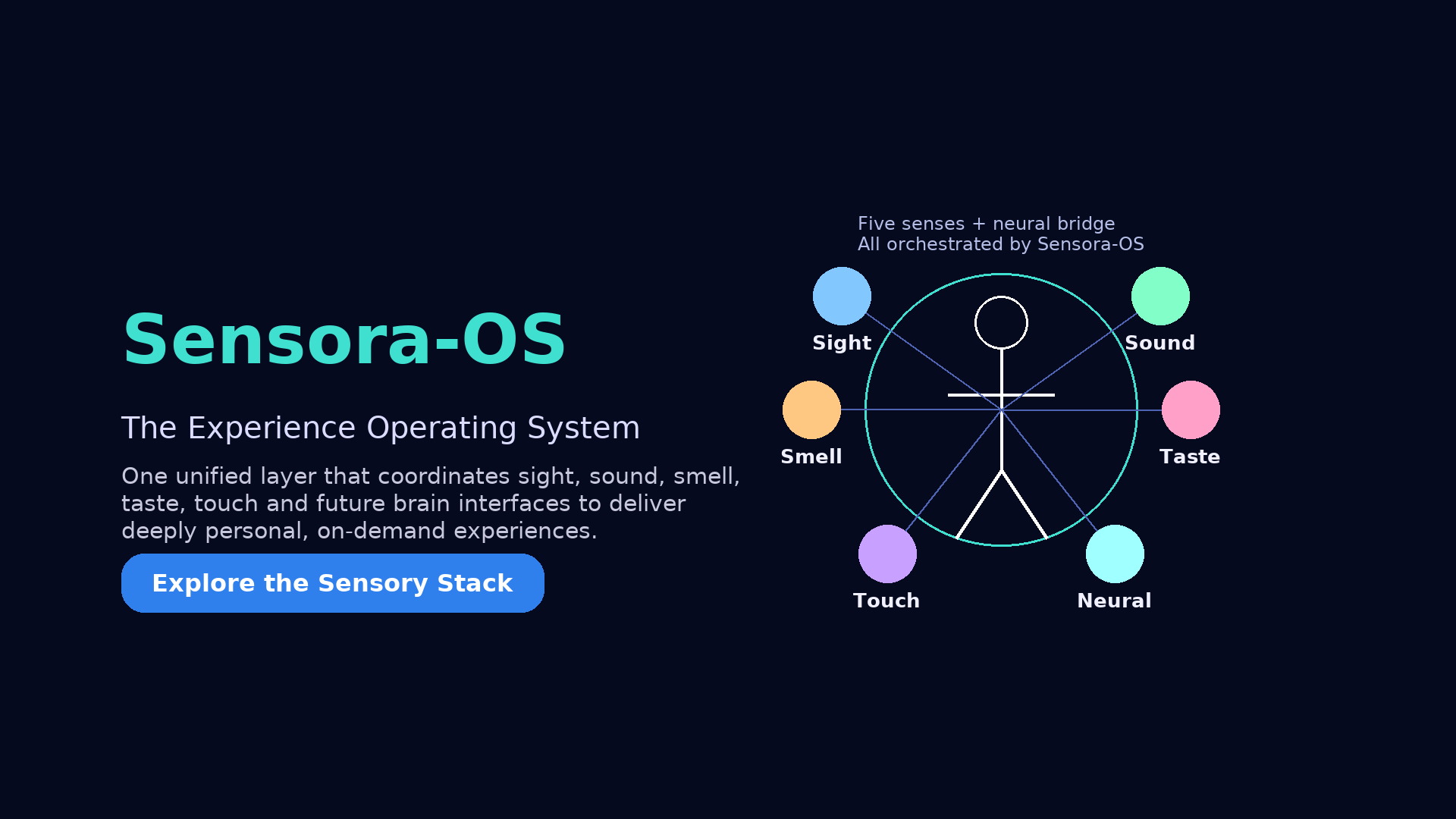

Sensora-OS: The Unified Platform

From Signals to Experiences: A Unified Semantic Topology

Current multisensory systems operate on a "peripheral model." A VR headset renders light; a haptic vest renders vibration; an olfactory dispenser releases chemical bursts. These devices have no shared understanding of the reality they are trying to simulate.

If a user sees a virtual fire but the thermal feedback lags by 200 ms, or the smell of smoke persists after the fire is extinguished, immersion is broken and cognitive load increases.

Sensora-OS inverts this model. Instead of driving devices directly, applications drive the Experience Operating System, which orchestrates the hardware to maintain a cohesive sensory state.

At the kernel level, Sensora-OS does not process "video" or "audio" files. It processes Experience Graphs.

An Experience Graph is a directed acyclic graph (DAG) where:

This allows the OS to "look ahead" and pre-load chemical cartridges or pre-tension haptic actuators before they are needed, so that slow and fast modalities stay synchronized.
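To make the look-ahead idea concrete, here is a minimal sketch, assuming a simplified graph model that this section does not define: each node is a hypothetical `SensoryEvent` with a presentation time and an assumed device lead time, and edges record which events must be perceived first. The scheduler orders the graph topologically (Python's standard `graphlib`) and issues each command `lead_time_ms` early, so a scent dispenser is triggered well before the display frame it must match. `SensoryEvent`, `schedule`, and all timing values are illustrative, not Sensora-OS APIs.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class SensoryEvent:
    """Hypothetical Experience Graph node: one event on one sensory modality."""
    name: str
    modality: str       # e.g. "visual", "thermal", "olfactory"
    present_at_ms: int  # when the user should perceive the event
    lead_time_ms: int   # assumed device preparation time (pre-load, pre-tension)


def schedule(events: dict[str, SensoryEvent],
             depends_on: dict[str, set[str]]) -> list[tuple[int, str]]:
    """Return (issue_time_ms, event_name) pairs, earliest command first.

    depends_on maps an event to the events that must be perceived before it.
    Each command is issued lead_time_ms before its presentation time, so slow
    actuators (scent, heat) are started earlier than fast ones (display).
    """
    graph = {name: depends_on.get(name, set()) for name in events}
    # static_order() raises graphlib.CycleError if the graph is not a DAG.
    order = list(TopologicalSorter(graph).static_order())

    timeline = []
    for name in order:
        event = events[name]
        # Reject graphs whose timing contradicts their causal order.
        for parent in graph[name]:
            if events[parent].present_at_ms > event.present_at_ms:
                raise ValueError(f"{name} would be perceived before {parent}")
        timeline.append((event.present_at_ms - event.lead_time_ms, name))
    # Stable sort: events with equal issue times keep their dependency order.
    return sorted(timeline, key=lambda item: item[0])


# Usage: the fire scenario from above, with made-up lead times.
events = {e.name: e for e in [
    SensoryEvent("fire_visual", "visual", 1000, 10),
    SensoryEvent("fire_heat", "thermal", 1000, 400),
    SensoryEvent("smoke_scent", "olfactory", 1500, 800),
]}
deps = {"fire_heat": {"fire_visual"}, "smoke_scent": {"fire_visual"}}
print(schedule(events, deps))
# [(600, 'fire_heat'), (700, 'smoke_scent'), (990, 'fire_visual')]
```

Note that the display command is issued last even though it is the causal root: ordering by issue time rather than by presentation time is what lets slow actuators land on the same frame.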

Figure: Sensory data flows from the brain through the Experience Graph to distributed hardware endpoints

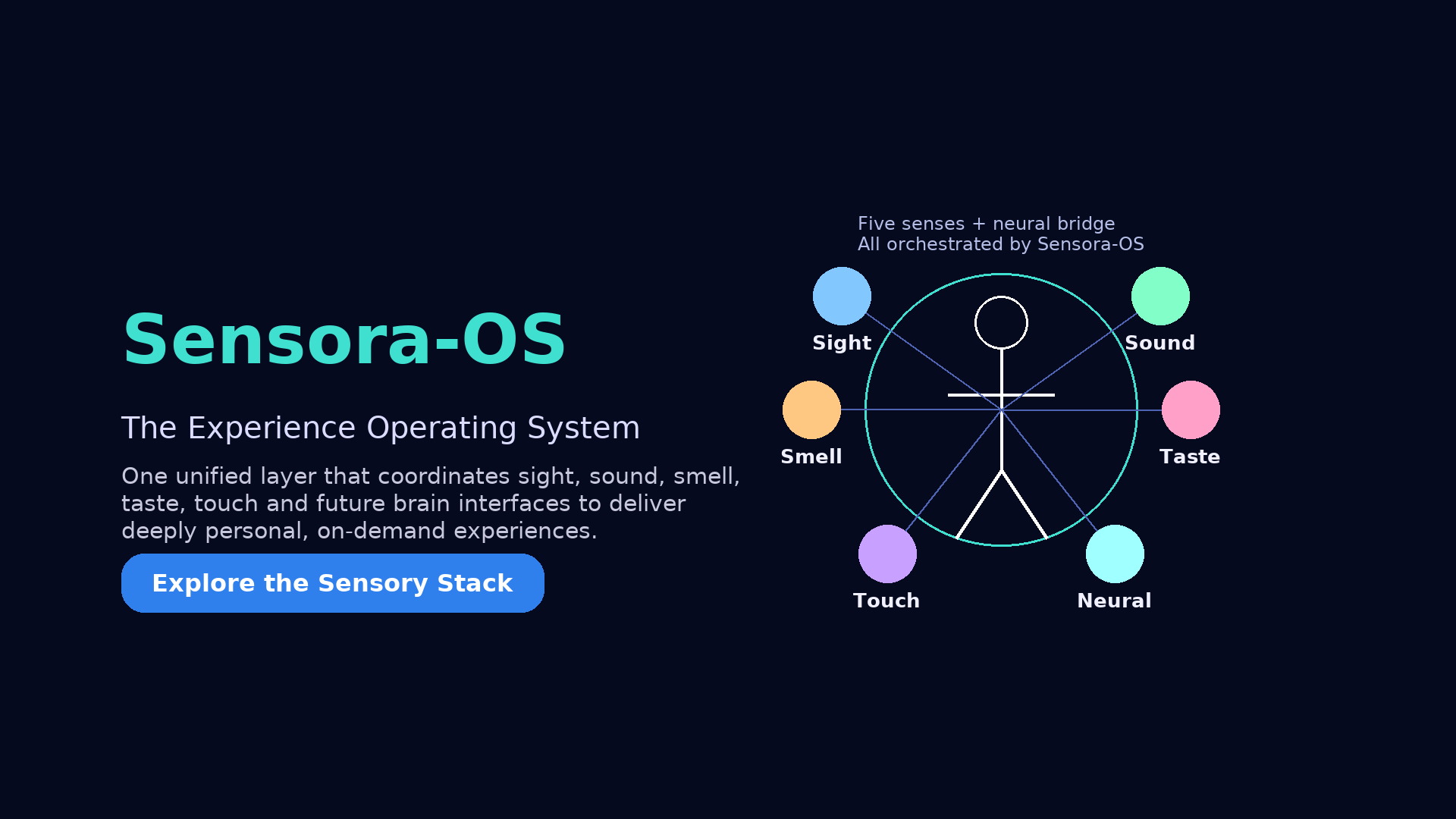

Unlike a standard OS, Sensora-OS interacts directly with human physiology. This requires a dedicated safety kernel known as the Personal Sensory Profile (PSP).

The PSP acts as a "biological firewall" between the application and the user. It enforces:

Continuous biometric monitoring ensures user safety
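The specific rules the PSP enforces are not listed in this section. Purely as an illustration of the "biological firewall" idea, the sketch below assumes one plausible rule: every application request passes through a per-user intensity ceiling for its output channel, and the ceiling is tightened while a monitored heart rate is elevated. The class name, channel names, and every threshold are hypothetical placeholders, not PSP parameters.

```python
from dataclasses import dataclass


@dataclass
class PersonalSensoryProfile:
    """Hypothetical PSP: per-user ceilings on normalized actuator intensity (0.0-1.0)."""
    max_intensity: dict[str, float]       # channel -> normal ceiling for this user
    stressed_scale: float = 0.5           # assumed extra reduction under stress
    resting_heart_rate_bpm: float = 70.0  # assumed baseline from biometric monitoring
    stress_margin_bpm: float = 30.0       # assumed margin above baseline = "elevated"

    def apply(self, channel: str, requested: float, heart_rate_bpm: float) -> float:
        """Clamp an application's request before it reaches the hardware."""
        # Unknown channels get a ceiling of 0.0: deny by default, like a firewall.
        ceiling = self.max_intensity.get(channel, 0.0)
        if heart_rate_bpm > self.resting_heart_rate_bpm + self.stress_margin_bpm:
            ceiling *= self.stressed_scale
        return max(0.0, min(requested, ceiling))


psp = PersonalSensoryProfile(max_intensity={"thermal": 0.6, "vibration": 0.9})
print(psp.apply("thermal", 1.0, heart_rate_bpm=75))     # 0.6 (user ceiling)
print(psp.apply("thermal", 1.0, heart_rate_bpm=120))    # 0.3 (elevated heart rate)
print(psp.apply("olfactory", 0.4, heart_rate_bpm=75))   # 0.0 (channel not permitted)
```

The deny-by-default choice for unlisted channels mirrors how a network firewall treats unmatched traffic; a real profile would presumably need richer rules (exposure duration, cumulative dose), which this sketch does not model.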

Sensora-OS is hardware-agnostic. It uses a standard Hardware Abstraction Layer (HAL) to translate high-level experience commands into device-specific commands.

The HAL bridges software and diverse hardware platforms

We categorize sensory endpoints into five primary classes:

- AR/VR Headsets & Smart Glasses (reference: Sensora VISTA)
- Spatial Audio Emitters & Bone Conduction (reference: Sensora AURIS)
- Vibrotactile Suits, E-Skin, & Thermal Weaves (reference: Sensora HAPTI)
- Micro-fluidic Scent Dispensers (reference: Sensora AROMA)
- Digital Taste Actuators (reference: Sensora GUSTO)
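The HAL pattern described above can be sketched as a driver registry keyed by endpoint class. This is a minimal illustration under stated assumptions: the modality labels on `EndpointClass`, the `ExperienceCommand` fields, the driver classes, and both byte formats are invented for the example; the actual HAL API is specified in the reference document, not here. The point is that the OS issues one device-independent command type, and only the registered driver knows the device's wire format.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from enum import Enum


class EndpointClass(Enum):
    """The five endpoint classes listed above (labels assumed), keyed to reference hardware."""
    VISUAL = "VISTA"      # AR/VR headsets & smart glasses
    AUDITORY = "AURIS"    # spatial audio emitters & bone conduction
    HAPTIC = "HAPTI"      # vibrotactile suits, e-skin, thermal weaves
    OLFACTORY = "AROMA"   # micro-fluidic scent dispensers
    GUSTATORY = "GUSTO"   # digital taste actuators


@dataclass(frozen=True)
class ExperienceCommand:
    """Hypothetical device-independent command issued by the OS."""
    endpoint: EndpointClass
    intensity: float  # normalized 0.0-1.0
    duration_ms: int


class Driver(ABC):
    """Hypothetical HAL driver: turns a generic command into a device payload."""
    endpoint: EndpointClass

    @abstractmethod
    def translate(self, cmd: ExperienceCommand) -> bytes: ...


class ScentDriver(Driver):
    endpoint = EndpointClass.OLFACTORY

    def translate(self, cmd: ExperienceCommand) -> bytes:
        # Invented wire format: opcode 0x01, valve opening 0-255, duration in 10 ms ticks.
        valve = max(0, min(255, round(cmd.intensity * 255)))
        ticks = max(0, min(255, cmd.duration_ms // 10))
        return bytes([0x01, valve, ticks])


class VibrationDriver(Driver):
    endpoint = EndpointClass.HAPTIC

    def translate(self, cmd: ExperienceCommand) -> bytes:
        # Invented wire format: opcode 0x02, amplitude as a 16-bit big-endian value.
        amplitude = max(0, min(0xFFFF, round(cmd.intensity * 0xFFFF)))
        return bytes([0x02]) + amplitude.to_bytes(2, "big")


class HAL:
    """Routes each command to whichever driver is registered for its endpoint class."""

    def __init__(self) -> None:
        self._drivers: dict[EndpointClass, Driver] = {}

    def register(self, driver: Driver) -> None:
        self._drivers[driver.endpoint] = driver

    def dispatch(self, cmd: ExperienceCommand) -> bytes:
        driver = self._drivers.get(cmd.endpoint)
        if driver is None:
            raise LookupError(f"no driver registered for {cmd.endpoint.name}")
        return driver.translate(cmd)


hal = HAL()
hal.register(ScentDriver())
hal.register(VibrationDriver())
print(hal.dispatch(ExperienceCommand(EndpointClass.OLFACTORY, 0.5, 2000)))  # b'\x01\x80\xc8'
```

Swapping a HAPTI suit for another vendor's vest would then mean registering a different `HAPTIC` driver; applications and the Experience Graph stay unchanged.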

For the mathematical formalism of the Experience Graph and the API specifications for the HAL, please consult the full reference document.